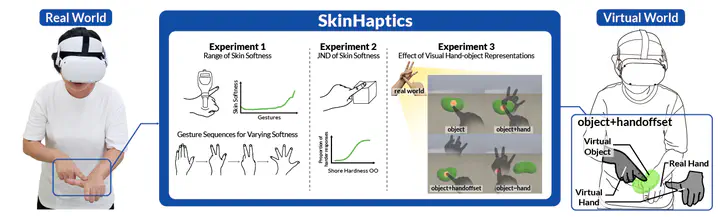

SkinHaptics: Exploring Skin Softness Perception and Virtual Body Embodiment Techniques to Enhance Self-Haptic Interactions

Abstract

Providing haptic feedback for soft, deformable objects is challenging, requiring complex mechanical hardware combined with modeling and rendering software. As an alternative, we advance the concept of self-haptics, in which the user’s own body delivers physical feedback, to convey dynamically varying softness in VR. Skin can exhibit different levels of contact softness when the biomechanical state of the body is altered. We propose SkinHaptics, a device-free approach that changes both the states of musculoskeletal structures and the virtual hand-object representations. We conduct three experiments to demonstrate SkinHaptics. Using a single common scale, we measure skin softness across various hand poses and contact points, and we evaluate the just noticeable difference in skin softness. We also investigate the effect of hand-object representations on self-haptic interactions. Our findings indicate that the visual representations significantly influence the embodiment of a self-haptic hand, and that the degree of hand embodiment strongly affects the haptic experience.
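The abstract mentions evaluating the just noticeable difference (JND) in skin softness but does not say how it is computed. As a hedged illustration only, not the authors' procedure, the sketch below shows one common psychophysical convention: linearly interpolate a psychometric curve (proportion of trials on which a comparison is judged softer than a standard) to find the point of subjective equality (PSE, the 50% point) and the 75% point, take their difference as the JND, and divide by the standard to get a Weber fraction. The function names, the stimulus scale, and the response proportions are all hypothetical.

```python
from typing import Sequence, Tuple


def interpolate_threshold(levels: Sequence[float], p_softer: Sequence[float], target: float) -> float:
    """Linearly interpolate the stimulus level where p_softer reaches `target`.

    Assumes `levels` is sorted ascending and `p_softer` is non-decreasing.
    """
    if len(levels) != len(p_softer) or len(levels) < 2:
        raise ValueError("need at least two matching levels and proportions")
    # Walk adjacent pairs of points and find the segment that brackets the target.
    for x0, y0, x1, y1 in zip(levels, p_softer, levels[1:], p_softer[1:]):
        if y0 <= target <= y1 and y1 != y0:
            return x0 + (target - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("target proportion is not bracketed by the data")


def jnd_and_weber(levels: Sequence[float], p_softer: Sequence[float], standard: float) -> Tuple[float, float, float]:
    """Return (PSE, JND, Weber fraction) using the x75 - x50 convention (one of several in use)."""
    pse = interpolate_threshold(levels, p_softer, 0.50)
    x75 = interpolate_threshold(levels, p_softer, 0.75)
    jnd = x75 - pse
    return pse, jnd, jnd / standard


# Hypothetical data: comparison softness levels on an arbitrary scale and the
# proportion of trials each was judged softer than a standard of 3.0.
levels = [1.0, 2.0, 3.0, 4.0, 5.0]
p_softer = [0.10, 0.30, 0.50, 0.80, 0.95]
pse, jnd, weber = jnd_and_weber(levels, p_softer, standard=3.0)
print(f"PSE={pse:.3f} JND={jnd:.3f} Weber={weber:.3f}")
```

Linear interpolation keeps the example dependency-free; a real analysis would more likely fit a parametric psychometric function (for example, a cumulative Gaussian or logistic) to the raw trial data.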